Sigal Samuel is a senior reporter for Vox’s Future Perfect and a co-host of the Future Perfect podcast. She writes primarily about the future of consciousness, tracking advances in artificial intelligence and neuroscience and their staggering ethical implications. Prior to joining Vox, Sigal was the religion editor at the Atlantic.

Mind-reading technology isn’t just coming; it’s already here.

Scientists at the University of Texas at Austin have developed a technique that can translate brain activity – such as the unspoken thoughts swirling through our heads – into actual speech, according to a study published in Nature Neuroscience.

In the past, researchers have shown that they can decode unspoken language by implanting electrodes in the brain and then using an algorithm that reads the brain’s activity and translates it into text on a computer screen. However, that approach is invasive, requiring surgery. It appealed only to a subset of patients, like those with paralysis, for whom the benefits were worth the costs. So researchers also developed techniques that don’t involve surgical implants. They were good enough to decode basic brain states, like fatigue, or very short phrases — but not much more.

The new technique changes that: it is a non-invasive brain-computer interface (BCI) that can let someone else read the general gist of what we’re thinking, even if we haven’t said a single word.

What makes that possible?

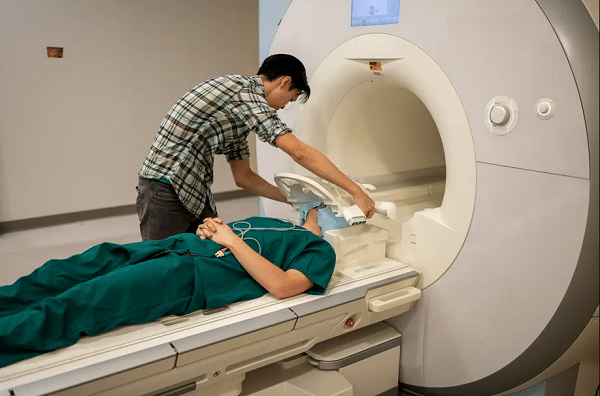

Two technologies are combined: fMRI scans, which measure blood flow to different areas of the brain, and large AI language models, like ChatGPT.

In the University of Texas study, three participants listened to 16 hours of storytelling podcasts like The Moth while scientists used an fMRI machine to track the change in blood flow in their brains. From those recordings, an artificial intelligence model learned, for each participant, to associate a phrase with the way that person’s brain looks when hearing that phrase.

As there are so many possible word sequences, and many of them are gibberish, the scientists also used a language model — specifically, GPT-1 — to narrow down possible sequences to well-formed English and predict which words will appear next in the sequence.
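
To make that two-part setup concrete, here is a toy sketch in Python. It is not the researchers’ code. The word lists, the two-number “brain responses,” and the function names are all invented for illustration, and the pruning strategy (a simple beam search that keeps only the best few candidates at each step) is an assumption about how such a search might work. The idea it illustrates is the one described above: a language model proposes only plausible next words, and a second model checks which candidate best matches the recorded brain activity.

```python
# Toy sketch only: every table and number below is invented for illustration.

# Hypothetical "language model": which words may plausibly follow which.
NEXT_WORDS = {
    "<s>": ["she", "he"],
    "she": ["misses", "stopped"],
    "he": ["misses", "stopped"],
    "misses": ["me"],
    "stopped": ["me"],
    "me": [],
}

# Hypothetical "encoding model": the brain response (two numbers) it predicts
# for each word.
PREDICTED_RESPONSE = {
    "she": (1.0, 0.0),
    "he": (0.0, 1.0),
    "misses": (1.0, 1.0),
    "stopped": (-1.0, 0.5),
    "me": (0.5, 0.5),
}


def mismatch(words, observed):
    """Sum of squared differences between predicted and observed responses."""
    total = 0.0
    for word, obs in zip(words, observed):
        pred = PREDICTED_RESPONSE[word]
        total += sum((p - o) ** 2 for p, o in zip(pred, obs))
    return total


def decode(observed, beam_width=2):
    """Beam search: extend candidates with allowed words, keep the best few."""
    beams = [["<s>"]]
    for step in range(len(observed)):
        candidates = [
            beam + [word]
            for beam in beams
            for word in NEXT_WORDS[beam[-1]]
        ]
        if not candidates:  # the language model allows no further words
            break
        # Rank by how well the predicted responses match the recording so far.
        candidates.sort(key=lambda c: mismatch(c[1:], observed[: step + 1]))
        beams = candidates[:beam_width]
    return beams[0][1:]  # drop the start marker


# Made-up noisy "recordings" that sit closest to she / misses / me.
observed = [(0.9, 0.1), (0.8, 1.1), (0.4, 0.6)]
decoded = decode(observed)
print(" ".join(decoded))  # prints: she misses me
```

In this toy version, the decoder never considers a sequence the language model rules out, which is what keeps the search from drowning in gibberish.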

The result was a decoder that got the gist right, even if it didn’t nail every single word. In one test, participants were asked to imagine telling a story while in the fMRI machine. Afterward, they repeated the story aloud so the scientists could check how well the decoded version matched the original.

In one example, the participant imagined looking for a message from her wife saying that she had changed her mind and was coming back. The decoder translated this as: “To see her for some reason I thought she would come to me and say she misses me.”

As another example, when the participant thought, “Coming down a hill at me on a skateboard and he stopped just in time,” the decoder translated: “He couldn’t get to me fast enough so he drove straight into my lane and tried to hit me.”

There’s no word-for-word translation, but much of the general meaning is preserved. It’s a breakthrough that goes well beyond previous brain-reading technology – and raises serious ethical concerns.

Brain-computer interfaces have staggering ethical implications

This kind of technology is already changing people’s lives, even though it seems like something out of a Neal Stephenson or William Gibson novel. The past dozen years have seen a number of paralyzed patients receive brain implants that allow them to control robotic arms and computer cursors with their thoughts.

Elon Musk’s Neuralink and Mark Zuckerberg’s Meta are working on BCIs that could one day detect thoughts directly from your neurons and translate them into words in real time, allowing you to operate your phone or computer simply by thinking.

There is still a long way to go before a non-invasive brain-computer interface that is also portable can read thoughts. After all, you cannot lug around an fMRI machine, which can cost up to $3 million. In the future, the study’s decoding approach might be adapted to portable systems like functional near-infrared spectroscopy (fNIRS), which measures the same activity as fMRI, but at a lower resolution.

As with many cutting-edge innovations, this one raises serious ethical questions.

Our brains are the last frontier of privacy. They contain our personal identity and most intimate thoughts. If those precious three pounds of goo in our skulls aren’t ours to control, what is?

Imagine a scenario where companies have access to our brain data. They could use that data to market products to us in ways our brains find practically irresistible. Since our purchasing decisions are largely driven by unconscious impressions, advertisers can’t get very helpful intel from consumer surveys or focus groups. They can get much better intel by going directly to the source: the consumer’s brain. Already, advertisers in the nascent field of “neuromarketing” are attempting to do just that, by studying how people’s brains react as they watch commercials. If advertisers get brain data on a massive scale, you might find yourself with a powerful urge to buy certain products without being sure why.

Imagine a scenario where governments use BCIs for surveillance, or police use them for interrogations. In a world where the authorities are empowered to eavesdrop on your mental state without your consent, the principle against self-incrimination — enshrined in the US Constitution — could become meaningless.

The potential misuse of these technologies is so great that neuroethicists argue that we need to revamp human rights laws to protect us.

“This research demonstrates how rapidly generative AI is enabling even our thoughts to be read,” Nita Farahany, author of The Battle for Your Brain, told me. “We must protect humanity with a right to self-determination over our brains and mental experiences before neurotechnology can be used at scale in society.”

For now, the technology has limits. “Our privacy analysis suggests that subject cooperation is currently required both for training and for applying the decoder,” the study’s authors write.

Essentially, the process worked only for cooperative participants who had willingly helped train the decoder. Those participants were able to throw off the decoder if they later chose to; when they resisted by naming animals or counting, the results were unusable. And for people on whose brain activity the decoder had not been trained, the results were gibberish.

In the future, decoders may be able to bypass these requirements. Furthermore, even if decoder predictions are inaccurate without subject cooperation, they may still be misinterpreted maliciously.

Farahany is worried about this sort of future. She told me that we are literally at the moment before: the point at which we can still decide whether to preserve our cognitive liberty (our right to self-determination over our brains and mental experiences) or allow this technology to develop without safeguards. This paper, she said, makes clear that the window is a very short one, and that we have a last chance to get this right for humanity.